
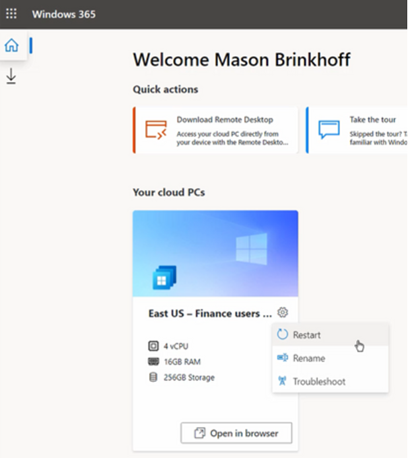

Windows 365 delivers a “Cloud PC”: literally a machine running Windows which is remotely accessed by an end user, stays just as they left it when they disconnect, and is managed and secured centrally. As you might expect, there will be various SKUs depending on how capable you want it to be; Paul Thurrott opines that there will be many options, as “Microsoft is addicted to tiers”. General Availability is due on 2nd August; it sits on top of the existing

According to Mary Jo Foley, it will be reassuringly expensive, so use cases will be carefully chosen; don’t expect everyone to sit at home running W365 and accessing it from some ancient PC. For more details on machine sizing and the mechanics of provisioning and managing Windows 365, see here.

Interesting examples given during the announcement were the remote government of Nunavut, and the hundreds of interns joining Microsoft for the summer. Normally they’d come to the office and be given a PC, but since they’re all at home, the cost and time burden of configuring PCs and shipping them out would have been high. Instead, they’re given a virtual desktop via Windows 365 – created en masse in a few minutes – and they connect to it from whatever kind of device they already have at home. When their tenure is up, their access is removed and there’s no data left behind on their iPad/Mac/Chromebook or home PC. Maybe 2022 could finally be the Year of the Linux Desktop?

For the rest of us, Windows 10 is still moving forward: the latest release, Windows 10 21H2, due later this year, has entered its latest stage of testing. And Windows 11 got another update, to build 22000.71, offering a variety of tweaks and polish. Even though Windows 10 is a modern OS with lots of great functionality, if you have already switched to Windows 11, using a machine with Win10 feels like going back in a time machine.

Tag: Virtualisation

XP Mode in Windows 7 saved me money

I’ve been running Windows 7 at home for a while now, and have been very pleased with it – on a decent spec machine (quad core, 4GB RAM, lots of SATA-II disk etc), it absolutely flies. As did Vista before it, if truth be told.

When I got this machine, I had it set up to dual boot between Windows Vista x64 and XP Media Center, partly because I had some software that didn’t like Vista.

One of the problem software/hardware combos was an oldish Canon 5000F scanner that gets used once every few months or so, but didn’t have 64-bit drivers available. It wasn’t enough hassle to make me want to go & buy a new scanner.

On moving to Windows 7, I’ve just used the Virtual Windows XP function, or “XP Mode” (which has now RTMed and will be available soon), which lets me run an XP virtual machine that has access to local resources like hard disks.

After firing up the Virtual XP instance, the scanner is listed under USB devices – the software was easy to install since the hard disks of the host machine are visible to the VM, and it was a snap to configure the Canon scanner software to save its output back into the Documents library of the host.

So all in all, a bit more trouble than if it just worked natively – but the XP Mode offers a solution to the gnarly problem of old hardware that isn’t being supported any more by its manufacturer. It certainly saved me the £50 or whatever it would take to buy a new scanner!

SMSE – a System Center light hidden under a bushel

SMSE – pronounced (in the UK at least) as ‘Smuzzy’, short for Server Management Suite Enterprise – is a licensing package from Microsoft, which can be an amazingly effective way to buy systems management software for your Windows server estate.

If you’re planning to virtualise your Windows server world, then SMSE is something of a no-brainer, since buying a single SMSE license for the host machine allows you to use System Center to manage not just the host but any number of guest (or child) VMs running on it.

Combine that with the license for Windows Server 2008 Datacenter Edition, which allows an unlimited number of Windows Server guests to run on the licensed host, and you’ve got a platform for running & managing as many Windows-based application servers as you can squeeze onto the box, on any virtualisation platform.

System Center is the umbrella name given to systems management technologies, broadly encompassing:

- Configuration Manager (as-was SMS, though totally re-engineered), which can be used for software distribution and “desired configuration state” management … so in a server example, you might want to know if someone has “tweaked” the configuration of a server, and either be alerted to the fact or maybe even reverse the change.

- Operations Manager (known as MOM before this version) performs systems monitoring and reporting, so it can monitor the health and performance of a whole array of systems, combined with “management packs” (or “knowledge modules”, as some would think of them) which tell Ops Mgr how a given application should behave. Ops Mgr can warn an administrator of an impending problem with their server application before it becomes a real one.

- Data Protection Manager – a new application, now in its 2nd release, which can be used either on its own or in conjunction with another enterprise backup solution, to take point-in-time snapshots of running applications and keep the data available. DPM lets the administrator deliver a shorter RTO (recovery time objective) and a more up-to-date RPO (recovery point objective), at very low cost.

- Virtual Machine Manager – a new server product, also in its 2nd release, which manages the nuts & bolts of a virtual infrastructure, based on either Microsoft’s Hyper-V or VMware’s ESX with VirtualCenter. If you have a mixture of Hyper-V and VMware, using VMM lets you manage the whole thing from a single console.
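The “desired configuration” idea behind Configuration Manager boils down to comparing a machine’s actual settings against a baseline, then alerting on (or reverting) any drift. Here’s a minimal sketch of that concept only; it is not Configuration Manager’s API, and the setting names (`smtp_relay`, `max_log_size_mb`, `firewall`) are hypothetical.

```python
from typing import Dict, List, Tuple

# A configuration is modelled as simple setting -> value pairs (an illustrative assumption).
Config = Dict[str, str]


def find_drift(baseline: Config, actual: Config) -> List[Tuple[str, str, str]]:
    """Return (setting, expected, found) for every baseline setting the machine doesn't match."""
    drift = []
    for key, expected in baseline.items():
        found = actual.get(key, "<missing>")
        if found != expected:
            drift.append((key, expected, found))
    return drift


def remediate(baseline: Config, actual: Config) -> Config:
    """Return a copy of the actual config with every baseline setting forced back into place."""
    fixed = dict(actual)
    fixed.update(baseline)
    return fixed


if __name__ == "__main__":
    # Hypothetical server where someone has "tweaked" the SMTP relay setting.
    baseline = {"smtp_relay": "disabled", "max_log_size_mb": "512", "firewall": "on"}
    actual = {"smtp_relay": "enabled", "max_log_size_mb": "512", "firewall": "on"}
    for key, expected, found in find_drift(baseline, actual):
        print(f"ALERT: {key} expected {expected!r}, found {found!r}")
    print(find_drift(baseline, remediate(baseline, actual)))  # prints [] once reverted
```

Whether to stop at the alert or go on to revert the change is a policy choice, which is why the two steps are kept as separate functions here.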

It’s easy to overlook managing the guests in a virtualised environment – the effort in such a project typically goes into moving the physical machines into the virtual world, but it’s equally important to manage what happens inside the guest VMs, as much as the mechanics of the virtual environment.

I’ve used a line which I think sums up the proposition nicely, and I’ve seen others quote the same logic:

If you have a mess of physical servers and you virtualise them, all you’re left with is a virtual mess.

Applying the idea of SMSE to a virtual environment, for one cost (at US estimated retail price, $1500), you get management licenses for Ops Manager, Config Manager, VMM and DPM, for the host machine and all of its guests.

Think of a virtualised Exchange environment, for example – that $1500 would cover Ops Manager telling you that Exchange was working well, Config Manager keeping the servers up to date and patched properly (even offline VMs), VMM managing the operation of the virtual infrastructure, and DPM keeping backups of the data within the Exchange servers (and maybe even the running VMs).

Isn’t that a bargain?
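To put a rough number on the bargain, here’s a minimal sketch that spreads the $1500 estimated retail price across the host and however many guests it runs. The guest counts are hypothetical, and the sum covers only the SMSE management licences, not Windows Server itself.

```python
SMSE_PRICE_PER_HOST_USD = 1500.0  # US estimated retail price quoted above


def cost_per_managed_machine(guests: int, price: float = SMSE_PRICE_PER_HOST_USD) -> float:
    """Spread one host's SMSE licence across the host itself plus all its guest VMs."""
    if guests < 0:
        raise ValueError("guests must be non-negative")
    return price / (guests + 1)  # +1 because the host is managed too


# Hypothetical consolidation ratios, from a lone host up to a densely packed box.
for guests in (0, 4, 9, 19):
    print(f"{guests:>2} guests -> ${cost_per_managed_machine(guests):,.2f} per managed machine")
```

The per-machine cost falls as you squeeze more guests onto the host, which is exactly why the package suits a consolidation project.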

Virtuali(z)sation & datacenter power

It’s been very quiet here on the Electric Wand for the last month or so:

- I took a new job in December, which means things have been pretty hectic at work. I’m now managing a new group, and have spent lots of time building up a great team.

- Just back from Seattle after a week at a Microsoft internal technical conference.

- To be honest, I haven’t had much to talk about on the blog 🙂

The TechReady conference I went to in Seattle had a few interesting themes, but much of the technical stuff presented is still internal only so can’t be discussed (yet) online. A good chunk is probably "subject to change" anyway …

There are a few themes which were either covered in a number of different sessions, or which really made me think hard about the way IT is going – amongst them Virtualisation (I do hate using the "z", even though it’s technically OK – it just seems so un-British), the march towards multi-core parallelism (instead of the clock-speed race), and the whole Green IT agenda of power usage.

I’m planning to write a bit more about these topics in coming weeks, along with the business case for Office Communications Server, but here’s some food for thought:

A major enterprise datacenter could well be drawing tens of megawatts of power – something that could be equated to many, many flights or other so-called demons of carbon emissions. A back-of-an-envelope calculation of all of Microsoft’s own datacenter power usage (including all the online services) would equate to over 100 Jumbo Jet flights from London to Seattle every day. That’s 100 planes, not 100 passengers…

This power usage topic is one which is going to grow in importance – not just because power prices are rising (eg a large internet datacenter drawing 100 MW could easily cost more than £15m per annum in power alone), but because of the carbon emissions that go with it. One project internally in Microsoft is looking at the actual power usage and the equivalent tonnes of CO2 emissions of all of its datacenters – a concept that’s sure to become more mainstream in the future.
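The £15m figure is easy to sanity-check. Here’s a back-of-an-envelope sketch, assuming the 100 MW figure is a constant average draw all year round (real facilities vary); it works out the annual energy and the tariff at which the bill reaches £15m, rather than assuming any particular electricity price.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours; ignores leap years


def annual_energy_mwh(average_draw_mw: float) -> float:
    """Energy used in a year by a facility drawing a constant average load, in MWh."""
    return average_draw_mw * HOURS_PER_YEAR


def annual_cost_gbp(average_draw_mw: float, price_gbp_per_mwh: float) -> float:
    """Annual power bill for that load at a flat tariff (a simplifying assumption)."""
    return annual_energy_mwh(average_draw_mw) * price_gbp_per_mwh


energy = annual_energy_mwh(100)         # 876,000 MWh for a 100 MW facility
break_even = 15_000_000 / energy        # tariff at which the bill hits £15m
print(f"{energy:,.0f} MWh/year; £15m is reached at about £{break_even:.2f}/MWh")
```

So any tariff above roughly £17/MWh pushes a 100 MW facility past £15m a year, before counting anything else.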

Siting datacenters near renewable energy sources (such as Google’s massive datacenter by the Columbia River in Oregon) makes the power usage more palatable, but it doesn’t remove the need to reduce heat output (and air conditioning requirements) and overall power usage – even if it means employing people to physically go round pulling the plugs at night-time on the myriad rack servers.

Anyway, as I said, more on this topic in coming weeks – in the meantime, I’ve not gone away … just waiting for the right time to pipe up 🙂

Tips for using Virtual PC and Virtual Server

Like many people who demo software technologies or who need to perform testing on multi-machine environments, I’ve been using Virtual PC and Virtual Server for years (and VMware before that). If you’re unfamiliar with these two Microsoft products, both are free and can be used to conduct lab tests, play with new technologies or even run legacy applications in an old OS environment which may not be compatible with the latest OS and hardware. See the Virtual PC and Virtual Server homepages for more information.

Once you’ve been using some Virtual Machines (VMs) for a while, the size of the hard disks can get a tad unwieldy – one commonly used demo environment in MS has a Virtual Hard Disk (VHD) file in excess of 30GB!

I routinely compress (at an NTFS level) the hard disk which hosts the VHDs, and try to hold them on a different physical disk from the host OS – it makes a huge difference to performance. I once ran an Exchange 2007 VHD on the 2nd disk in my laptop and compared startup times: running off the 2nd disk was fastest, and holding it on the primary disk along with the OS was slowest. Even running the VHD from an 8GB USB drive was quicker than holding it on the host’s primary HDD!

There are many places online where tips and tricks are shared, but I came across Cameron Fuller’s blog recently, and he’s covered lots of this over the last year or two – if you’re thinking of doing anything serious with VPC or VS, check it out.

Here’s one of the more interesting points:

On Virtual PC disk writes were faster (57%) on a compressed drive, and disk reads were also faster (83%).

So there you have it. If you’re running Virtual PC, definitely compress the VHD. In Cameron’s case, his CPU was clearly outstripping his disk I/O, so it was quicker for the PC to read a compressed file and then decompress it in RAM than to read the whole thing uncompressed.
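A simple model shows why a fast CPU can make compression a net win. Here’s a minimal sketch, assuming reading means fetching the compressed bytes from disk and then decompressing them one after the other (no overlap). The disk speed, compression ratio and decompression speeds are hypothetical numbers, not Cameron’s measurements.

```python
def read_seconds(size_mb: float, disk_mb_s: float, ratio: float = 1.0,
                 decompress_mb_s: float = float("inf")) -> float:
    """Time to read size_mb of logical data from disk.

    ratio is the compression ratio (1.0 = uncompressed); the disk only has to
    deliver size_mb / ratio, but the CPU must then decompress size_mb of output.
    Assumes I/O and decompression happen sequentially, which is pessimistic.
    """
    io_time = (size_mb / ratio) / disk_mb_s
    cpu_time = size_mb / decompress_mb_s  # 0.0 when nothing needs decompressing
    return io_time + cpu_time


# Hypothetical 1GB read from a 50 MB/s laptop disk, with data compressing 2:1.
plain = read_seconds(1000, 50)
fast_cpu = read_seconds(1000, 50, ratio=2, decompress_mb_s=200)
slow_cpu = read_seconds(1000, 50, ratio=2, decompress_mb_s=80)
print(f"uncompressed {plain:.1f}s, fast CPU {fast_cpu:.1f}s, slow CPU {slow_cpu:.1f}s")
```

With these numbers the fast CPU wins (15s against 20s) but the slow one loses (22.5s), which matches the point that compression pays when the CPU outstrips the disk.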

In Virtual Server, the case is less clear-cut: disk writes were slower (22%) but reads were faster (52%). It’s still well worth considering, especially if you’re using VS in a training, lab or testing environment, where the dramatically smaller file sizes (both in terms of storage and of copying over the network) may well outweigh any slight performance degradation.
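Whether the Virtual Server results add up to a net gain depends on the read/write mix of your workload. Here’s a rough sketch, assuming “52% faster” and “22% slower” mean throughput multiplied by 1.52 and 0.78 respectively (the figures could be read other ways), and treating the mix as the share of bytes transferred.

```python
def relative_time(read_fraction: float, read_speedup: float, write_speedup: float) -> float:
    """Disk time on a compressed drive relative to uncompressed (1.0 = no change).

    read_fraction is the share of bytes transferred that are reads; each byte's
    transfer time is assumed to scale with 1 / throughput.
    """
    return read_fraction / read_speedup + (1 - read_fraction) / write_speedup


def break_even_read_fraction(read_speedup: float, write_speedup: float) -> float:
    """Read share at which compression neither helps nor hurts overall."""
    if read_speedup == write_speedup:
        raise ValueError("reads and writes change equally; there is no break-even mix")
    return read_speedup * (write_speedup - 1) / (write_speedup - read_speedup)


READ, WRITE = 1.52, 0.78  # assumed throughput multipliers from the quoted percentages
f = break_even_read_fraction(READ, WRITE)
print(f"Compression pays off once reads exceed about {f:.0%} of disk traffic")
```

Under those assumptions the break-even point is around 45% reads, so a read-heavy lab VM should come out ahead, while a write-heavy one may not.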

Virtual PC 2007 now available

The Virtual PC team released the latest version to the web the other day, and it’s available for free, downloadable from here. Headline changes over previous versions are the ability to run on Windows Vista and to host Vista as a guest OS within VPC, as well as myriad performance improvements.

I’ve been using VPC 2007 in beta for a while and it’s been rock solid, and feels snappier than I recall VPC 2004 doing (though since VPC 2004 wasn’t happy running on Vista, it’s been a while).

More technical information on Virtual PC 2007 is available here.

Use NewSID on cloned virtualised machines

I came across a problem recently when a colleague was building a virtual Windows Server environment, and was reminded of it the other night during a webcast with Exchange MVPs, when one of the attendees said he was hitting issues with Exchange 2007 servers not finding Active Directory properly.

The solution lay at the heart of how the VM environment had been built: a single “base” OS image had been copied for each machine in the environment, and each copy was then joined to the domain and had Exchange installed on it.

If you’re building a multi-machine environment, it saves a lot of time if you build a single image and make sure it’s all patched up through Windows Update etc, then it’s just a matter of installing the Exchange (or whatever) servers once you’ve joined a copy of the VM into the domain.

Trouble is, when you install a new server (such as the base OS build), it creates a unique Security Identifier (SID), which stays the same even if the machine is renamed and its domain membership changed. Whilst you’ll typically be able to join a cloned machine into the same domain, and it might look like it’s working OK, numerous strange things can happen: it can look as if the trust between the machine and the domain is broken, or you can have problems authenticating to resources.

NewSID is a free tool that Mark Russinovich developed while at Winternals/Sysinternals, and is now available from Microsoft since the acquisition of Mark’s company. The trick is to run NewSID on your cloned machine before joining the domain, and it will create a new, random SID, which means you won’t get clobbered later on with the kind of problems described above.
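To see why clones clash, it helps to look at the shape of a machine SID: `S-1-5-21` followed by three 32-bit sub-authorities, with local accounts identified by appending a relative ID (RID), such as 500 for the built-in Administrator. Here’s an illustrative sketch of that structure. It is not how NewSID works internally; the real tool also has to update every place the old SID is recorded on the machine.

```python
import secrets


def random_machine_sid() -> str:
    """Build a machine-style SID string: S-1-5-21 plus three random 32-bit sub-authorities."""
    parts = [secrets.randbits(32) for _ in range(3)]
    return "S-1-5-21-" + "-".join(str(p) for p in parts)


def local_account_sid(machine_sid: str, rid: int) -> str:
    """A local account's SID is the machine SID with its relative ID (RID) appended."""
    return f"{machine_sid}-{rid}"


ADMINISTRATOR_RID = 500  # well-known RID of the built-in Administrator account

base_image = random_machine_sid()
clone_a = clone_b = base_image  # copying the VM copies the machine SID unchanged
print(local_account_sid(clone_a, ADMINISTRATOR_RID) ==
      local_account_sid(clone_b, ADMINISTRATOR_RID))  # True: the two accounts look identical

clone_b = random_machine_sid()  # a fresh random SID, in the spirit of what NewSID does
print(local_account_sid(clone_a, ADMINISTRATOR_RID) ==
      local_account_sid(clone_b, ADMINISTRATOR_RID))  # differs, barring a 1-in-2**96 collision
```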

(NB: It’s worth noting that NewSID isn’t supported for production use – for that, you should really Sysprep the machines instead.)

//E